Beyond ChatGPT: Using LLMs to Accelerate Your CAD Work

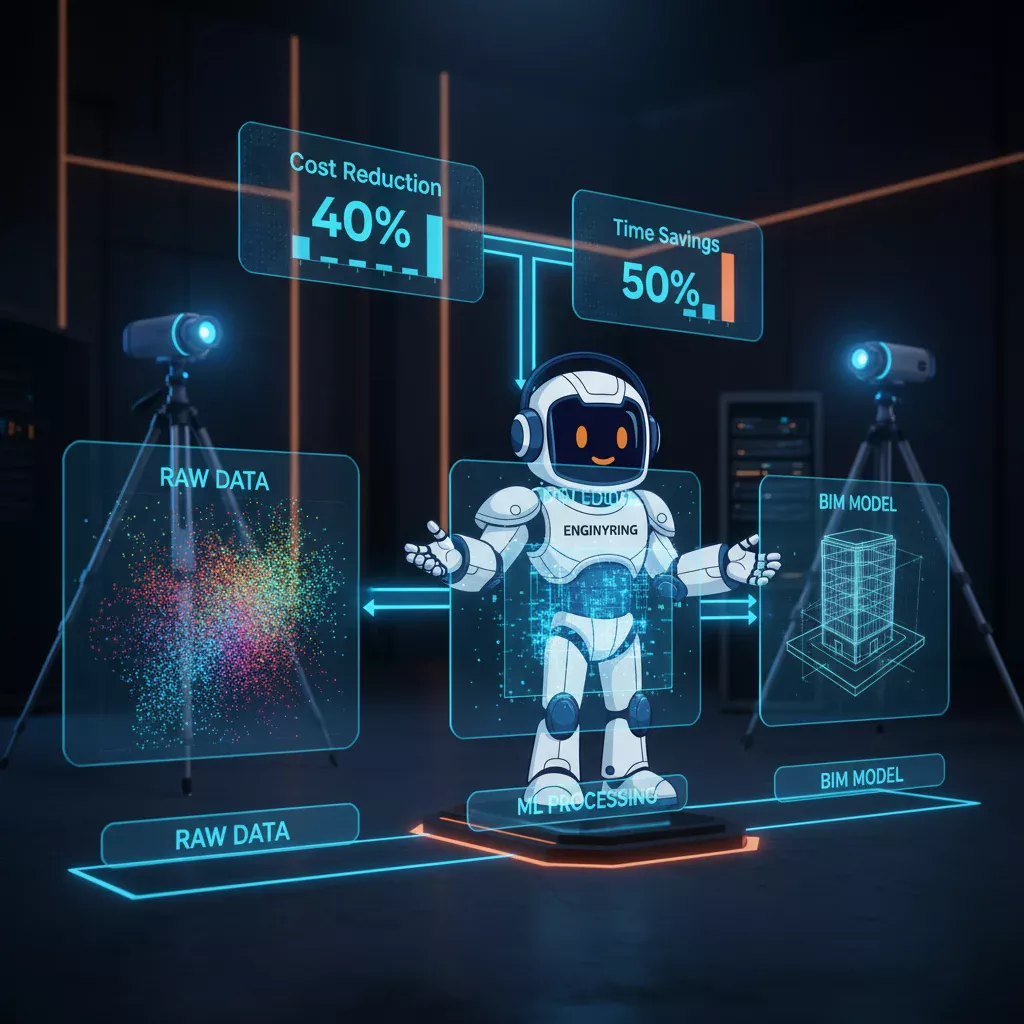

Large Language Models (LLMs) can transform CAD drafting workflows by automating script generation, technical documentation, and drawing analysis through natural language interfaces. Engineers and architects use ChatGPT, Claude, and specialized CAD AI tools to generate Dynamo Python scripts, validate drawing standards, and accelerate BIM documentation without extensive programming knowledge. These applications can deliver 40-85% time reductions for repetitive tasks such as parametric family creation, batch operations, and quality control protocols. Because they require no specialized plugin investment or lengthy implementation cycle, LLMs put automation within reach of non-programmers and can deliver productivity gains quickly.

CAD professionals face mounting pressure to deliver faster while maintaining accuracy and documentation standards. Traditional automation requires programming expertise, creating barriers for designers focused on technical work rather than software development. Manual documentation consumes hours spent extracting information from drawings and coordinating it across platforms. Drawing analysis for quality control and standards compliance remains tedious and error-prone. These inefficiencies compound across projects, limiting capacity and profitability.

LLM technology addresses these challenges through conversational interfaces that translate plain-language requests into functional code, analysis workflows, and documentation templates. This article demonstrates practical implementations that deliver measurable value across script generation, drawing analysis, documentation automation, and workflow optimization. ENGINYRING integrates LLM-assisted workflows with its scan-to-BIM services, combining automated modeling with intelligent post-processing to accelerate project delivery while maintaining quality standards.

Script generation for Dynamo and Revit

LLMs are well suited to generating Python scripts for Dynamo and Revit that automate parametric family creation, schedule manipulation, and batch operations from natural language descriptions. Request "create a Dynamo script that renames all sheets based on their discipline parameter" and you typically receive a working draft within seconds. The technology translates intent into implementation, lowering the syntax barrier for designers without software development backgrounds.

The script generation workflow begins with a precise problem description covering inputs, desired transformations, and expected outputs. For example, specify "Python script for Revit API that collects all doors, filters by fire rating parameter, and generates a schedule sorted by level then room number." The LLM generates code that handles API syntax, object filtering, and data structures. Including relevant context such as the Revit version, existing parameter names, and the desired output format can raise first-attempt success rates from roughly 60% to 85%.
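The filtering and sorting logic in that prompt can be prototyped before any Revit API code is involved, which makes it easier to check what the generated script ought to do. The sketch below is a minimal illustration assuming doors have already been exported to plain dictionaries; the keys `mark`, `level`, `room_number`, and `fire_rating` and the function `fire_rated_schedule` are hypothetical names, and a real script would read these values from element parameters through the Revit API.

```python
from typing import Optional

# Hypothetical door records; in Revit these values would come from element parameters.
doors = [
    {"mark": "D-103", "level": "Level 2", "room_number": "205", "fire_rating": "60 min"},
    {"mark": "D-101", "level": "Level 1", "room_number": "110", "fire_rating": "90 min"},
    {"mark": "D-102", "level": "Level 1", "room_number": "104", "fire_rating": None},
    {"mark": "D-104", "level": "Level 1", "room_number": "102", "fire_rating": "60 min"},
]

def fire_rated_schedule(records: list[dict], rating: Optional[str] = None) -> list[dict]:
    """Keep doors that have a fire rating (optionally one specific rating), sorted by level then room."""
    rated = [
        d for d in records
        if d.get("fire_rating") and (rating is None or d["fire_rating"] == rating)
    ]
    # Plain string sort: "Level 10" would sort before "Level 2"; sorting by level elevation is safer in practice.
    return sorted(rated, key=lambda d: (d["level"], d["room_number"]))

for row in fire_rated_schedule(doors):
    print(row["level"], row["room_number"], row["mark"], row["fire_rating"])
```

Keeping the selection rule in one small function also gives a reviewer something concrete to compare the LLM's Revit version against.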

ArchiLabs demonstrates a more advanced implementation, generating visual Dynamo node graphs from text descriptions rather than just Python code. The platform interprets a request such as "extract room areas from architectural model and populate linked Excel spreadsheet" and creates a complete node workflow with geometry extraction, data processing, and export operations. This approach suits users who prefer visual programming while keeping the accessibility of a natural language interface.

Code validation is a critical phase that prevents errors in production environments. Test LLM-generated scripts on isolated sample projects before deploying them to active work. Ask the model to "add detailed comments explaining each section's purpose" so the code can be understood and modified later. Refine iteratively through follow-up prompts such as "modify to handle walls without fire rating parameter gracefully" to address edge cases discovered during testing.

A sheet creation case study illustrates the value in practice. An architectural firm automated title block population, view placement, and sheet naming, reducing manual effort from 3 hours to 45 minutes per project, a 75% time saving. The Dynamo script, developed through iterative LLM conversations, handled project-specific naming conventions and organizational standards. Initial generation took 15 minutes, followed by 30 minutes of refinement and testing, so the script paid for itself on its first deployment.
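The sheet renaming request from the start of this section reduces to a lookup from discipline value to sheet prefix plus a running counter. A sketch under assumed conventions: the discipline names, the `PREFIXES` map, the `A-101` numbering pattern, and `renumber_sheets` are hypothetical stand-ins for an office standard, not part of Dynamo or the Revit API.

```python
from collections import defaultdict

# Hypothetical office standard: discipline parameter value -> sheet number prefix
PREFIXES = {"Architectural": "A", "Structural": "S", "Mechanical": "M", "Electrical": "E"}

def renumber_sheets(sheets: list[dict], start: int = 101) -> dict[str, str]:
    """Map each current sheet number to a new one, counting up per discipline in list order."""
    counters: dict[str, int] = defaultdict(lambda: start)
    renames = {}
    for sheet in sheets:
        prefix = PREFIXES.get(sheet["discipline"])
        if prefix is None:
            continue  # unknown discipline: leave the sheet for manual review
        renames[sheet["number"]] = f"{prefix}-{counters[prefix]}"
        counters[prefix] += 1
    return renames

sheets = [
    {"number": "001", "discipline": "Architectural"},
    {"number": "002", "discipline": "Structural"},
    {"number": "003", "discipline": "Architectural"},
    {"number": "004", "discipline": "Landscape"},
]
print(renumber_sheets(sheets))  # {'001': 'A-101', '002': 'S-101', '003': 'A-102'}
```

Returning an old-to-new mapping instead of renaming in place leaves room to review the result, and to check it against existing sheet numbers (which Revit requires to be unique), before a transaction applies it.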

Effective LLM prompt structure for script generation:

Context: "I'm using Revit 2024 API with Python"

Task: "Create script that batch updates parameter values"

Inputs: "Element category, parameter name, new value"

Logic: "Filter elements, check parameter exists, update value"

Error handling: "Skip elements lacking specified parameter"

Output: "Transaction report showing updated count"

Example prompt:

"Generate Revit Python script for Revit 2024 that updates 'Fire Rating' parameter for all doors in active view. Input: new fire rating value as string. Handle doors without this parameter by skipping gracefully. Output: count of successfully updated doors. Include transaction handling and error reporting."

Limitations include complex geometric constraints that require multiple refinement iterations. LLMs struggle with intricate parametric relationships involving conditional geometry, nested families, or advanced mathematical transformations. These scenarios benefit from a hybrid approach in which the LLM generates the foundational code structure and humans add the sophisticated geometric logic. Context-window limits also affect large projects that require information about numerous existing parameters, families, or project standards, so keep requests focused and incremental.
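The Logic, Error handling, and Output lines of the prompt structure describe a loop that skips elements lacking the parameter and reports a count. The sketch below shows that control flow outside Revit; `Element` is a hypothetical stand-in class and `batch_update` a hypothetical helper, whereas a real script would look up parameters through the Revit API and write them inside a transaction.

```python
from dataclasses import dataclass, field

@dataclass
class Element:
    """Hypothetical stand-in for a Revit element: parameters as a name -> value dict."""
    id: int
    params: dict = field(default_factory=dict)
    read_only: set = field(default_factory=set)

def batch_update(elements, name: str, value: str) -> dict:
    """Set `name` to `value` where possible; skip and record every other element."""
    report = {"updated": 0, "missing": [], "read_only": []}
    for el in elements:
        if name not in el.params:
            report["missing"].append(el.id)      # skip gracefully, as the prompt requests
        elif name in el.read_only:
            report["read_only"].append(el.id)    # present but not writable
        else:
            el.params[name] = value
            report["updated"] += 1
    return report

doors = [
    Element(1, {"Fire Rating": ""}),
    Element(2, {"Mark": "D-2"}),
    Element(3, {"Fire Rating": "30 min"}, read_only={"Fire Rating"}),
    Element(4, {"Fire Rating": "30 min"}),
]
print(batch_update(doors, "Fire Rating", "60 min"))
```

Reporting skipped elements by reason, rather than only a count, makes it easier to tell a missing parameter from a locked one when reviewing results.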

Technical drawing analysis and OCR

LLMs combined with computer vision can extract structured information from technical drawings, automating dimension reading, symbol identification, and specification extraction. Upload drawings (often as PDF or image exports, since many general-purpose chat tools cannot read DWG files directly) with a request such as "extract all dimensions and create CSV with element type, dimension value, and coordinate location." The system identifies text, dimensional annotations, and standard symbols, generating machine-readable data for downstream analysis or BIM integration.

An automated drawing analysis workflow pairs OCR with LLM interpretation. Computer vision identifies text regions and geometric elements; the LLM then contextualizes the extracted information, distinguishing dimensions from notes, room numbers from reference markers, and specifications from general annotations. A request such as "analyze this floor plan identifying rooms with area calculations and door/window counts per room" returns structured output suitable for database import or validation against BIM models.
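Part of that contextualization can be handled by cheap rules before the LLM sees anything, leaving the model only the ambiguous strings. A minimal sketch, assuming OCR has already produced individual text tokens; the regular expressions are hypothetical conventions (feet-inch and millimetre dimensions, 3-4 digit room numbers, `3/A-501`-style callouts) and would need tuning to a specific drawing set.

```python
import re

# Hypothetical patterns; tune them to the annotation conventions of the actual drawing set.
PATTERNS = [
    ("dimension", re.compile(r"^\d+'-\d+(?: \d+/\d+)?\"$")),         # 12'-6" or 3'-4 1/2"
    ("dimension", re.compile(r"^\d+(?:\.\d+)?\s?mm$")),               # 2400 mm
    ("room_number", re.compile(r"^\d{3,4}[A-Z]?$")),                  # 104, 1204B
    ("reference", re.compile(r"^[A-Z]?\d+/[A-Z]-?\d+(?:\.\d+)?$")),   # 3/A-501 detail callout
]

def classify(token: str) -> str:
    """Label an OCR token by the first matching pattern, else mark it for LLM review."""
    token = token.strip()
    for label, pattern in PATTERNS:
        if pattern.match(token):
            return label
    return "unresolved"  # pass these to the LLM (or a person) with surrounding context

for t in ["12'-6\"", "2400 mm", "104", "3/A-501", "TYP. U.N.O."]:
    print(f"{t!r:>14} -> {classify(t)}")
```

Order matters: the millimetre pattern runs before the room-number pattern, and a bare "2400" would still be labelled a room number, which is exactly the kind of case that benefits from LLM or human context.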

Error detection applications identify drawing discrepancies, missing elements, and standards violations through automated comparison. Upload architectural and structural drawings with a request to "identify coordinate mismatches between grid lines on both drawings," and the LLM analyzes the extracted data, flagging inconsistencies that manual review might miss. Specify organizational standards through prompts like "validate layer naming against AIA CAD Layer Guidelines highlighting violations" to check compliance without tedious manual review.
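Once grid labels and positions have been extracted from both drawings, the mismatch check itself is deterministic arithmetic. The sketch assumes each drawing's grids are available as a label-to-offset dictionary in millimetres within a shared coordinate system, with a 5 mm tolerance; the data, the tolerance, and `grid_mismatches` are hypothetical.

```python
def grid_mismatches(arch: dict[str, float], struct: dict[str, float], tol: float = 5.0) -> list[str]:
    """Compare grid offsets (mm) by label; report grids missing from either drawing or off by > tol."""
    issues = []
    for label in sorted(arch.keys() | struct.keys()):
        if label not in struct:
            issues.append(f"{label}: only on architectural")
        elif label not in arch:
            issues.append(f"{label}: only on structural")
        elif abs(arch[label] - struct[label]) > tol:
            issues.append(f"{label}: off by {abs(arch[label] - struct[label]):.0f} mm")
    return issues

arch = {"A": 0.0, "B": 7200.0, "C": 14400.0, "1": 0.0}
struct = {"A": 0.0, "B": 7250.0, "C": 14402.0, "2": 8400.0}
print(grid_mismatches(arch, struct))
# ['1: only on architectural', '2: only on structural', 'B: off by 50 mm']
```

Keeping this step rule-based means the LLM's job is limited to reading labels and values off the drawings, where its output is easy to spot-check.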

BIM integration uses analyzed drawing data to accelerate model creation from legacy documentation. Wall dimensions, door schedules, and equipment locations extracted from 2D drawings can feed automated modeling workflows. Combined with scan-to-BIM automation, LLMs process drawing annotations while point clouds provide geometric accuracy. This hybrid approach produces comprehensive as-built documentation that merges measured reality with original design intent.

Cost estimation applications automate quantity takeoffs from analyzed drawings. A request to "extract all concrete wall dimensions calculating total square footage by thickness category" returns a quick preliminary estimate. Comparing extracted quantities against BIM model schedules reveals discrepancies between the design documentation and the model, catching errors early before they become costly field corrections and change orders.
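Behind that request is a simple aggregation: group walls by thickness, sum length × height, and compare the totals with the model schedule. A sketch with hypothetical extracted values in feet (matching the square-footage wording of the prompt) and an assumed 2% relative tolerance; `area_by_thickness` and `compare` are illustrative names, not part of any tool.

```python
from collections import defaultdict

def area_by_thickness(walls: list[dict]) -> dict[str, float]:
    """Sum wall face area (length x height, sq ft) per thickness category."""
    totals: dict[str, float] = defaultdict(float)
    for w in walls:
        totals[w["thickness"]] += w["length_ft"] * w["height_ft"]
    return dict(totals)

def compare(takeoff: dict[str, float], schedule: dict[str, float], rel_tol: float = 0.02) -> dict[str, float]:
    """Return categories where drawing and model quantities differ by more than rel_tol (drawing minus model)."""
    flagged = {}
    for key in takeoff.keys() | schedule.keys():
        a, b = takeoff.get(key, 0.0), schedule.get(key, 0.0)
        if abs(a - b) > rel_tol * max(a, b):
            flagged[key] = a - b
    return flagged

walls = [
    {"thickness": '8"', "length_ft": 40.0, "height_ft": 12.0},
    {"thickness": '8"', "length_ft": 25.0, "height_ft": 12.0},
    {"thickness": '12"', "length_ft": 30.0, "height_ft": 14.0},
]
takeoff = area_by_thickness(walls)
print(takeoff)                                        # {'8"': 780.0, '12"': 420.0}
print(compare(takeoff, {'8"': 780.0, '12"': 380.0}))  # {'12"': 40.0}
```

Gross face area ignores openings; deducting doors and windows would be a natural next refinement to request.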

Implementation requires quality input: drawings with clear, legible text and standard symbols. Scans at 300 DPI or higher give better OCR accuracy than low-resolution PDFs, while poorly organized drawings with inconsistent annotation practices reduce extraction reliability. Preprocessing through contrast enhancement and noise reduction improves results for aged or degraded documents. Plan for 15-25% manual verification effort, focused on critical dimensions and specifications.

Documentation and standards assistance

LLMs accelerate technical documentation by generating standard text, specification templates, and quality control protocols from brief descriptions. A request to "create construction specification section for cast-in-place concrete walls including materials, execution, and quality assurance" returns draft text structured along CSI MasterFormat conventions that still needs project-specific customization and professional review. This can reduce specification writing time by an estimated 50-70% while maintaining professional standards and completeness.

Layer naming convention generation supports CAD standard compliance across projects and teams. Provide organizational standards or reference the AIA guidelines and request "generate complete layer naming structure for commercial office building including architectural, structural, MEP, and site disciplines." The LLM produces a comprehensive layer list with descriptions, discouraging ad-hoc naming that creates downstream coordination problems. Use the results to build template DWG files distributed to project teams, ensuring consistency from project initiation.
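A generated layer list can be checked mechanically before it goes into a template. The regular expression below is a simplified approximation of the AIA/NCS layer-name pattern, assumed here as a 1-2 letter discipline designator, a 4-character major group, up to two 4-character minor groups, and an optional 1-letter status field. Verify the exact rules against the edition of the guidelines your office follows.

```python
import re

# Simplified approximation of the AIA / NCS layer name format (an assumption, not the full standard)
LAYER_RE = re.compile(
    r"^[A-Z]{1,2}"             # discipline designator, e.g. A, S, AD
    r"-[A-Z0-9]{4}"            # major group, e.g. WALL
    r"(?:-[A-Z0-9]{4}){0,2}"   # optional minor groups, e.g. FULL
    r"(?:-[A-Z])?$"            # optional status field, e.g. N (new), E (existing)
)

def invalid_layers(names: list[str]) -> list[str]:
    """Return the layer names that do not fit the simplified pattern."""
    return [n for n in names if not LAYER_RE.match(n)]

layers = ["A-WALL-FULL", "S-GRID", "E-LITE-CLNG-N", "A-Wall", "WALLS", "M-HVAC-SUPP-DUCT-E"]
print(invalid_layers(layers))  # ['A-Wall', 'WALLS']
```

A format check like this catches typos and ad-hoc names; whether a well-formed name is the *right* layer for its content still needs human judgment.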

Block library creation speeds up standard detail development from verbal descriptions. Specify "create title block with project name, sheet number, drawn by, checked by, and revision table fields sized for ARCH D sheet" (24 × 36 in) to receive AutoLISP or script code that draws the block, or detailed creation instructions. For detail libraries, requests such as "produce standard construction details for wood frame wall sections including insulation, vapor barriers, and finishes" yield written build-ups and annotation content to draft from, accelerating project setup and improving detail consistency.

Quality control checklist generation produces project-specific validation protocols. Describe the project type, delivery phase, and organizational requirements to receive comprehensive review criteria. A request to "create BIM quality control checklist for LOD 350 hospital project including model completeness, clash detection, and standards compliance items" returns actionable validation procedures. Tailor the generic checklist through follow-up prompts that add client-specific requirements or regulatory compliance items.

Annotation assistance improves dimensioning consistency and completeness. Describe the drawing type and dimensioning requirements to receive placement strategies and annotation standards. A request to "recommend dimensioning approach for reflected ceiling plan showing grid spacing, fixture locations, and ceiling heights" returns guidance reflecting common industry practice. Questions about style decisions, such as "AIA dimensioning standards for interior elevations," can help settle team debates, provided the answers are checked against the published standard itself.

Command translation and workflow optimization

Natural language CAD command interfaces translate verbal requests into platform-specific syntax, removing the need to memorize complex command sequences. Ask "how do I create array of objects along path in AutoCAD" and receive the relevant command (ARRAYPATH) with parameter explanations. This accessibility particularly benefits occasional users, new team members, and professionals working across multiple CAD platforms with different command conventions.

Cross-platform translation assists workflows involving multiple software packages. Request "translate this AutoCAD LISP routine to Revit Python" to receive equivalent functionality for a different environment; because the two platforms have very different object models, expect to test and adapt the result. Describe a workflow in one platform and ask for the approach in another, for example "I use AutoCAD's QSELECT to filter elements; what's the Revit equivalent approach," to bridge knowledge gaps when transitioning between tools.

Macro generation creates custom routines from verbal descriptions without manual coding. Specify "create AutoCAD macro that sets specific layers current, draws rectangle from two picked points, adds center mark, and returns to previous layer" to receive macro code ready to test and assign to a button. In Revit, similar requests can produce macros or add-in scripts that automate frequently used command sequences, improving efficiency through personalized tools.

Repetitive task identification uses the LLM's pattern recognition to suggest automation opportunities. Describe daily workflows and request optimization recommendations. A statement such as "I manually update 50+ title blocks weekly changing project phase and revision information" prompts suggestions for parameter-driven automation or data-linked approaches. LLMs often propose solutions that non-programmers might not consider, spreading process improvement across skill levels.

Troubleshooting assistance speeds problem resolution. Describe error messages or unexpected behavior to receive potential causes and solutions. A query like "Revit warns 'cannot create element' when placing family; family loads successfully but won't place" returns systematic troubleshooting steps addressing common causes. This support reduces downtime and reliance on specialized technical support, particularly for common issues; answers about rare problems, where training data is sparse, deserve more skepticism.

Command translation example workflows:

AutoCAD → Revit:

Q: "How do I do AutoCAD XREF functionality in Revit?"

A: "Use the Link Revit command (Insert tab > Link panel). Positioning options include automatic placement (Origin to Origin, Center to Center, By Shared Coordinates) or manual placement. The Attachment and Overlay reference types behave much like their XREF counterparts, and shared coordinates keep linked models aligned for coordination. Manage links through the Manage Links dialog."

Natural language → Command syntax:

Q: "Rotate multiple objects around common point Revit"

A: "Select elements → Modify tab → Rotate. The rotation center defaults to the center of the selection; drag it or use Place on the Options Bar to set a different point, then specify the angle by picking points or typing a value. To keep the originals, check Copy on the Options Bar; for multiple rotated copies, use the Array command with the Radial option."

Workflow optimization query:

Q: "Faster way to apply view templates to 50 views?"

A: "Select the views in the Project Browser (Ctrl+Click), right-click, choose Apply Template Properties, and select the template. Alternatively, generate a Python script for batch application with filters."

Parametric design code generation

LLMs can generate parametric modeling code, enabling rapid design iteration through text-based geometry definitions. A request to "create parametric truss geometry with adjustable span, height, and bay spacing" returns code that produces adaptable structural models. This capability suits conceptual design exploration, where multiple geometric variants need evaluation before detailed development, and text-to-3D generation cuts the time spent on manual geometry creation in early phases.
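To make "parametric" concrete, here is a minimal sketch of the geometry layer such a request might produce: node coordinates and a member list for a flat, parallel-chord Pratt truss driven by span, height, and panel length. The Pratt layout, the rounding of the span into whole panels, and all names are assumptions; loads, member sizing, and export to a CAD platform are out of scope.

```python
def pratt_truss(span: float, height: float, panel_length: float):
    """Return (nodes, members) for a parallel-chord Pratt truss; nodes map name -> (x, y)."""
    n = max(2, round(span / panel_length))   # number of panels; actual panel width = span / n
    dx = span / n
    nodes = {}
    for i in range(n + 1):
        nodes[f"B{i}"] = (i * dx, 0.0)       # bottom chord node
        nodes[f"T{i}"] = (i * dx, height)    # top chord node
    members = []
    for i in range(n):
        members.append((f"B{i}", f"B{i+1}"))  # bottom chord segment
        members.append((f"T{i}", f"T{i+1}"))  # top chord segment
        # Pratt diagonals slope down toward midspan (tension under gravity load)
        if (i + 0.5) * dx < span / 2:
            members.append((f"T{i}", f"B{i+1}"))
        else:
            members.append((f"T{i+1}", f"B{i}"))
    for i in range(n + 1):
        members.append((f"B{i}", f"T{i}"))    # verticals, including end posts
    return nodes, members

nodes, members = pratt_truss(span=12.0, height=2.0, panel_length=3.0)
print(len(nodes), "nodes,", len(members), "members")
```

Changing `span`, `height`, or `panel_length` regenerates every node and member, which is the property that makes text-based iteration fast; with an even panel count the diagonals mirror about midspan.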

CADmium demonstrates text-to-model workflows in which natural language descriptions generate browser-based 3D CAD models. Describing a "rectangular base 100x50mm with four cylindrical supports 10mm diameter extending 30mm vertically" produces an immediate geometric visualization. Refine it conversationally, for example "increase support height to 40mm and add 5mm fillets at base connections," and the model updates in near real time. This approach suits rapid prototyping and design communication where speed takes priority over precision.

FreeCAD automation through Python script generation extends parametric capabilities in open-source environments. A request for a "FreeCAD Python script creating parametric window family with adjustable width, height, and mullion spacing" returns code targeting FreeCAD's Python API, which should be checked against the installed FreeCAD version. These scripts enable custom object libraries without commercial licensing costs. Combining generated scripts with organizational standards yields consistent, maintainable parametric components.
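Much of the parametric logic in a window request is arithmetic that can be tested independently of FreeCAD. With n equal panes of width p and n - 1 mullions of width m filling an overall width W, n·p + (n - 1)·m = W, so keeping p at or below a maximum P requires n ≥ (W + m)/(P + m). The sketch below encodes that rule; the equal-pane assumption, millimetre units, and `mullion_layout` are hypothetical, and the FreeCAD calls that would turn these numbers into solids are deliberately omitted.

```python
import math

def mullion_layout(width: float, mullion: float, max_pane: float) -> tuple[int, float, list[float]]:
    """Equal panes no wider than max_pane; returns (pane count, pane width, mullion centerlines).

    Assumes mullions sit only between panes (the outer frame lies outside `width`); values in mm.
    """
    if width <= 0 or max_pane <= 0:
        raise ValueError("width and max_pane must be positive")
    panes = max(1, math.ceil((width + mullion) / (max_pane + mullion)))
    pane = (width - (panes - 1) * mullion) / panes
    # centerline of mullion i sits after i + 1 panes and i full mullions
    centers = [(i + 1) * pane + i * mullion + mullion / 2 for i in range(panes - 1)]
    return panes, pane, centers

print(mullion_layout(2500, 50, 800))  # (3, 800.0, [825.0, 1675.0])
```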

Design iteration workflows use rapid code modification to explore geometric variations. Generate a base design through an initial prompt, then request modifications like "adjust roof pitch from 4:12 to 6:12 and extend overhangs to 600mm." The LLM updates the code rather than requiring manual geometry manipulation. This text-based iteration suits early design phases, where multiple alternatives need quick evaluation. Document successful variations to build design option libraries for future reference.
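A pitch change like the one in that prompt ripples into dependent dimensions, which is why editing driving parameters beats redrawing. A worked sketch for a symmetric gable, assuming a hypothetical 10 m span and an overhang measured horizontally: rise = half-span × pitch/12, and sloped rafter length = (half-span + overhang) × √(1 + (pitch/12)²). Ridge board thickness and seat cuts are ignored, and `gable` is an illustrative helper.

```python
import math

def gable(span_mm: float, pitch_in_12: float, overhang_mm: float) -> dict[str, int]:
    """Ridge rise and sloped rafter length (including horizontal overhang) for a symmetric gable."""
    slope = pitch_in_12 / 12.0                              # rise per unit of horizontal run
    run = span_mm / 2.0
    rise = run * slope
    rafter = (run + overhang_mm) * math.hypot(1.0, slope)   # sloped length over the full run
    return {"rise_mm": round(rise), "rafter_mm": round(rafter)}

print(gable(10_000, 4, 600))  # before the change
print(gable(10_000, 6, 600))  # after the prompt's change
```

Going from 4:12 to 6:12 on this span raises the ridge from about 1667 mm to 2500 mm and lengthens each rafter from about 5903 mm to 6261 mm.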

Geometric validation checks that LLM-generated designs remain manufacturable and constructible. Request "add validation checks ensuring all walls maintain minimum 100mm thickness and door openings don't exceed structural span limits," and the LLM incorporates constraint checks that reject invalid geometry. Manual verification remains essential, particularly for structural adequacy, code compliance, and constructability. Treat AI-generated geometry as a starting point requiring professional engineering validation before documentation.
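Constraint checks are easiest to trust when they are explicit rules a reviewer can read. The sketch applies the 100 mm minimum wall thickness from the prompt; the 3000 mm maximum opening width is a placeholder only, not a structural value, and the record layout and `check_design` are hypothetical.

```python
MIN_WALL_MM = 100        # from the example prompt
MAX_OPENING_MM = 3000    # placeholder only; real limits come from the structural engineer

def check_design(walls: list[dict], openings: list[dict]) -> list[str]:
    """Return human-readable violations; an empty list means these particular checks passed."""
    errors = []
    for w in walls:
        if w["thickness_mm"] < MIN_WALL_MM:
            errors.append(f"wall {w['id']}: {w['thickness_mm']} mm < {MIN_WALL_MM} mm minimum")
    for o in openings:
        if o["width_mm"] > MAX_OPENING_MM:
            errors.append(f"opening {o['id']}: {o['width_mm']} mm exceeds {MAX_OPENING_MM} mm limit")
        host = next((w for w in walls if w["id"] == o["wall"]), None)
        if host is None:
            errors.append(f"opening {o['id']}: host wall {o['wall']} not found")
        elif o["width_mm"] >= host["length_mm"]:
            errors.append(f"opening {o['id']}: wider than host wall {host['id']}")
    return errors

walls = [{"id": "W1", "thickness_mm": 200, "length_mm": 6000},
         {"id": "W2", "thickness_mm": 90, "length_mm": 2500}]
openings = [{"id": "D1", "wall": "W1", "width_mm": 900},
            {"id": "D2", "wall": "W2", "width_mm": 3200}]
for e in check_design(walls, openings):
    print(e)
```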

Limitations include complex organic geometries, intricate Boolean operations, and advanced surface modeling. LLMs handle rectilinear forms and standard geometric primitives well but struggle with freeform surfaces, complex lofts, and computationally intensive operations. Hybrid workflows that combine LLM-generated parametric frameworks with manual surface modeling give the best results. Generation is also too slow for real-time interaction with complex assemblies, so large component libraries are better produced in batches.

Practical implementation framework

Successful LLM integration requires a systematic implementation that balances experimentation with validation protocols. Begin by identifying repetitive, time-consuming tasks with automation potential. Prioritize workflows with clear inputs, defined logic, and predictable outputs, since these maximize first-attempt success rates. Document current manual processes to establish baseline time measurements for ROI calculation. Select LLM platforms that balance capability, cost, and organizational data security requirements.

Platform selection weighs technical capability, integration options, and pricing models. ChatGPT Plus (around €20/month) provides general-purpose capability suitable for script generation and documentation tasks. Claude Pro offers large context windows, useful for analyzing lengthy specifications or complex code. Specialized CAD AI tools such as CADAI (€50-200/month) provide domain-specific training that may improve first-attempt accuracy for CAD-specific tasks. Evaluate free tiers before committing to paid subscriptions, validating capability against organizational needs.

Validation protocols prevent deployment of flawed automation that causes downstream problems. Establish testing environments isolated from production projects. Run LLM-generated scripts against sample data to verify intended behavior, document unexpected results, and request modifications through iterative prompts. Require peer review for scripts affecting multiple projects or critical data. Keep scripts and their prompt history under version control so successful generations can be reproduced and refined.

Prompt template libraries speed up repeated operations and keep results consistent across team members. Document successful prompts for common tasks to build an organizational knowledge base. Structure templates with variable fields like "[PROJECT_TYPE]" and "[LOD_LEVEL]" for quick customization. Share templates through an internal wiki or documentation system to avoid redundant prompt development. Include validation criteria and testing procedures alongside prompts so quality holds up as more team members apply the templates.
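A minimal sketch of such a template using the same `[FIELD]` placeholder style: the helper refuses to return a prompt while any field is still unfilled, which catches half-customized templates before they are sent. The template text and the `fill` function are hypothetical examples.

```python
import re

FIELD = re.compile(r"\[([A-Z_]+)\]")  # matches [PROJECT_TYPE], [LOD_LEVEL], ...

QC_TEMPLATE = (
    "Create a BIM quality control checklist for a [PROJECT_TYPE] project at [LOD_LEVEL], "
    "including model completeness, clash detection, and standards compliance items."
)

def fill(template: str, **values: str) -> str:
    """Replace every [FIELD]; raise KeyError instead of returning a prompt with gaps."""
    missing = sorted(set(FIELD.findall(template)) - set(values))
    if missing:
        raise KeyError(f"unfilled template fields: {missing}")
    # A function replacement avoids re's backslash processing in the inserted values
    return FIELD.sub(lambda m: values[m.group(1)], template)

print(fill(QC_TEMPLATE, PROJECT_TYPE="hospital", LOD_LEVEL="LOD 350"))
```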

12-week implementation roadmap:

Week 1-2: Discovery and Setup

□ Identify 5 repetitive tasks >2 hours/week each

□ Measure current completion time baseline

□ Select LLM platform and create account

□ Review security/data handling policies

□ Establish testing environment

Week 3-4: Initial Experimentation

□ Generate scripts for simplest identified task

□ Test outputs on sample projects

□ Refine prompts documenting iterations

□ Calculate time savings vs manual approach

□ Create first prompt template

Week 5-6: Template Library Development

□ Generate prompts for remaining identified tasks

□ Standardize template structure

□ Document validation procedures

□ Begin team training on template usage

□ Establish code review process

Week 7-8: Production Pilot

□ Deploy 2-3 validated workflows to real projects

□ Monitor for errors and edge cases

□ Collect team feedback on usability

□ Refine templates based on real-world use

□ Calculate actual ROI from implementations

Week 9-10: Expansion

□ Identify additional automation opportunities

□ Train broader team on successful workflows

□ Develop advanced prompts for complex tasks

□ Integrate with existing project templates

□ Document lessons learned

Week 11-12: Optimization and Standardization

□ Refine underperforming workflows

□ Create comprehensive template library

□ Establish ongoing training program

□ Define metrics tracking continued value

□ Plan next phase expansion areas

Training programs build organizational capability so that value extends beyond early adopters. Conduct workshops demonstrating successful implementations to build confidence in the technology. Provide hands-on exercises in which team members generate and test scripts under guidance. Create reference documentation with example prompts, common pitfalls, and troubleshooting guidance. Designate LLM champions within teams to support peers and identify new applications. Continuous learning keeps pace with evolving LLM capabilities and emerging use cases.

Cost benefit analysis and ROI

LLM adoption can deliver rapid ROI through time savings on repetitive tasks without significant capital expenditure. Subscriptions cost roughly €20-€200 per user per month, depending on platform and capability requirements. Implementation requires 10-20 hours of staff time to develop initial prompts and validation workflows. These modest upfront investments compare favorably with specialized plugins costing thousands annually or custom development requiring months and substantial budgets.

Time savings can be quantified across several workflow categories. Script generation can reduce development time by 70-85% compared with manual coding, particularly for professionals with limited programming experience: a Dynamo script requiring 3 hours of manual development can be completed in 30-45 minutes including LLM generation, testing, and refinement. Documentation tasks like specification writing can speed up by 40-60%, because the LLM produces draft text to customize rather than a blank document. Drawing analysis that automates dimension extraction can save 2-4 hours per drawing set, depending on complexity.

Quality improvements add value beyond time savings. Automated standards compliance checking can reduce errors by an estimated 30-50%, catching violations that manual review might miss. Consistent documentation templates improve deliverable quality and coordination, and less manual data entry means fewer transcription errors and downstream corrections. These gains translate into less rework, fewer RFIs, and better client satisfaction, though the monetary value varies by project.

A worked example shows the payback timeline for a typical implementation. A ten-person team uses ChatGPT Plus (€200/month total) to automate scripting, saving each member 3 hours weekly. At an average cost of €75/hour, monthly savings reach €9,000 (10 users × 3 hours/week × 4 weeks × €75/hour). Subtracting the €200 subscription yields a net benefit of €8,800 per month once savings are fully realized, so the subscription pays for itself within the first month. Because savings ramp up gradually during the pilot, the payback period including initial implementation time extends to 1-2 months.
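The same arithmetic as a small function, so the example can be rerun with a team's own figures. It keeps the text's simplification of a 4-week month; `monthly_roi` is an illustrative helper, and the second call reproduces the 5-person case in the framework below (8 hours per user per month, expressed as 2 hours per week).

```python
def monthly_roi(users: int, hours_per_week: float, rate: float, sub_per_user: float,
                weeks_per_month: float = 4.0) -> dict[str, float]:
    """Gross monthly savings, subscription cost, and net benefit (4-week month, as in the text)."""
    savings = users * hours_per_week * weeks_per_month * rate
    cost = users * sub_per_user
    return {"savings": savings, "cost": cost, "net": savings - cost}

print(monthly_roi(users=10, hours_per_week=3, rate=75, sub_per_user=20))
# {'savings': 9000.0, 'cost': 200, 'net': 8800.0}
print(monthly_roi(users=5, hours_per_week=2, rate=75, sub_per_user=20))
# {'savings': 3000.0, 'cost': 100, 'net': 2900.0}
```

One-time costs such as setup and training are left out deliberately; subtracting them from the first month's net gives the first-month figure.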

ROI calculation framework:

Implementation Costs:

LLM subscription: €20-€200/month per user

Initial setup: 10-20 hours @ internal rate

Template development: 15-30 hours @ internal rate

Training: 5-10 hours per team member

Total first month: €500-€3,000 typical range, depending on team size and internal rate

Monthly Recurring Value:

Script generation: 2-5 hours saved per user

Documentation: 3-6 hours saved per user

Analysis/QC: 1-3 hours saved per user

Total monthly: 6-14 hours per user

ROI Example (5-person team):

Costs (first month): €100 subscription + €200 training = €300

Savings: 5 users × 8 hours × €75/hour = €3,000/month

Net benefit: €2,700 in the first month, €2,900/month thereafter

Payback period: <1 month

Annual ROI: >1,000%

Break-even threshold:

Subscription cost alone: about 0.5 hours saved per user monthly (€40 ÷ €75/hour)

Conservative target: 1.5 hours saved per user monthly

At €75/hour: €112.50 value vs typical €20-40 cost

Very low adoption barrier

Hidden benefits extend beyond direct time savings to knowledge democratization and capability development. Junior staff gain access to expertise through LLM guidance, accelerating their proficiency. Teams can tackle automation projects that previously required specialized programmers, expanding organizational capability. Reduced reliance on external consultants for scripting builds internal self-sufficiency. These strategic advantages compound over time, creating competitive differentiation and operational resilience.

Limitations and mitigation strategies

Understanding LLM constraints is necessary for realistic expectations and effective mitigation. Complex geometric operations involving intricate parametric relationships demand multiple refinement iterations; initial generations provide foundational logic that humans must extend for sophisticated constraints. Context-window limits restrict how much of a large existing codebase or comprehensive project standard the model can consider at once. Gaps in version-specific API knowledge produce code that targets outdated or nonexistent methods, so validate it against current documentation.

Hallucination is a significant risk: LLMs can generate plausible but incorrect technical information. Fabricated API methods, incorrect parameter types, or invalid geometric operations may look syntactically correct yet fail during execution. Mitigation requires systematic testing in real software environments rather than assuming correctness. Cross-reference critical technical specifications against authoritative sources, and maintain healthy skepticism for unusual or complex requirements where training data may be sparse.

Iterative refinement addresses these limitations through conversational correction. Initial generation provides a starting framework, and testing then reveals issues. Describe the observed problems and request modifications, for example "the script fails when elements lack the specified parameter; add error handling." Successive iterations converge toward robust solutions, each cycle addressing further edge cases. Document the iteration history so results can be reproduced and reused as examples for similar future requests.

Hybrid validation combines LLM flexibility with deterministic, rule-based checking. After generating scripts conversationally, apply automated checks for common errors such as missing error handling, uninitialized variables, or unsafe operations. Validate geometric outputs against physical constraints to ensure dimensional feasibility. This layered approach catches errors before production deployment, maintaining quality while keeping the LLM's speed advantage.
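One way to build that deterministic layer for Python scripts is the standard library's `ast` module, which parses generated code without executing it. The sketch flags scripts with no `try`/`except` at all, calls whose names appear on a risky-call blocklist, and method calls missing from an allowlist of names already verified against the target API documentation (a direct check for hallucinated methods). Both lists and the `lint` function are hypothetical and deliberately crude; anything flagged goes to human review rather than being rejected automatically.

```python
import ast

BANNED = {"eval", "exec", "remove", "rmtree", "system"}   # hypothetical blocklist of risky call names
# Hypothetical allowlist: method names already verified against the API docs for the target version
KNOWN_METHODS = {"LookupParameter", "Set", "AsString", "Start", "Commit", "RollBack", "append", "get"}

def lint(source: str) -> list[str]:
    """Static checks on an LLM-generated script; the script itself is never executed."""
    tree = ast.parse(source)
    findings = []
    if not any(isinstance(node, ast.Try) for node in ast.walk(tree)):
        findings.append("no try/except: failures will not be reported")
    for node in ast.walk(tree):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        name = func.attr if isinstance(func, ast.Attribute) else getattr(func, "id", None)
        if name in BANNED:
            findings.append(f"line {node.lineno}: risky call '{name}'")
        elif isinstance(func, ast.Attribute) and name not in KNOWN_METHODS:
            findings.append(f"line {node.lineno}: unverified method '{name}'")
    return findings

generated = """
import os
p = door.LookupParameter("Fire Rating")
p.SetFireRating("60 min")
os.remove("log.txt")
"""
for f in lint(generated):
    print(f)
```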

Human oversight remains essential, particularly for operations affecting safety, compliance, or significant cost. Designate experienced professionals to review LLM-generated structural calculations, code compliance interpretations, and safety-critical automation. Establish approval workflows that prevent unreviewed AI outputs from reaching production systems. Treat LLMs as powerful assistants that augment rather than replace professional judgment. Clearly separating what may be automated from what requires human validation prevents overreliance and its associated risks.

Fine-tuning on organizational data, where feasible, addresses recurring accuracy issues with organizational standards. Some platforms support custom training on internal documentation, standards, and successful past implementations. The investment is justified mainly for large organizations with substantial proprietary methodologies; smaller firms can achieve adequate results through well-structured prompts that include relevant context. Weigh error rates and correction time against the cost of fine-tuning.

Integration with BIM and construction workflows

LLM integration with existing BIM workflows amplifies value through complementary capabilities. LLM-generated scripts can work with Digital Twin platforms to move data from operational sensors into BIM models, and natural language specifications can produce middleware that translates between IoT data formats and Revit parameters. This lets non-programmers configure complex data pipelines that previously required specialized development.

Scan-to-BIM workflows benefit from LLM-assisted post-processing automation. Generate validation scripts that compare point cloud-derived models against design intent and flag deviations requiring attention. Classification of scan data into Revit categories can be automated with algorithms based on element characteristics, and custom quality control routines can validate model completeness, element placement accuracy, and parameter population. ENGINYRING combines these automation capabilities with professional modeling services to deliver comprehensive as-built documentation efficiently.

Clash detection automation extends standard Navisworks workflows with more intelligent conflict analysis. Generate scripts that categorize clashes by severity, trade responsibility, and resolution complexity, and automate report generation with clash descriptions, responsible parties, and recommended resolutions. Custom filtering rules can highlight project-specific conflict patterns while suppressing clashes within acceptable tolerance. This analysis shortens coordination meetings by focusing discussion on genuine issues.
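Triage rules like these can be written down explicitly once clash results have been exported from the coordination tool. The sketch assumes hypothetical records with two disciplines and a penetration depth in millimetres; the 10 mm tolerance, the severity thresholds, and the discipline priority order that decides which trade reroutes are placeholders for a project's own coordination rules, and `triage` is an illustrative name.

```python
TOLERANCE_MM = 10  # clashes shallower than this are treated as acceptable (placeholder)
# Placeholder responsibility rule: earlier in the list = harder to move
PRIORITY = ["Structural", "Plumbing", "Mechanical", "Electrical"]
SEVERITY_ORDER = ["high", "medium", "low"]

def triage(clashes: list[dict]) -> list[dict]:
    """Drop tolerance clashes, assign severity and the trade expected to resolve each one."""
    out = []
    for c in clashes:
        depth = c["depth_mm"]
        if depth < TOLERANCE_MM:
            continue
        severity = "high" if depth >= 100 else "medium" if depth >= 25 else "low"
        # The trade that is easier to reroute (later in PRIORITY) is assigned the clash;
        # a discipline missing from PRIORITY raises ValueError, surfacing it for review.
        owner = max((c["a"], c["b"]), key=PRIORITY.index)
        out.append({"id": c["id"], "severity": severity, "owner": owner})
    return sorted(out, key=lambda r: SEVERITY_ORDER.index(r["severity"]))

clashes = [
    {"id": 1, "a": "Structural", "b": "Mechanical", "depth_mm": 150},
    {"id": 2, "a": "Electrical", "b": "Plumbing", "depth_mm": 30},
    {"id": 3, "a": "Mechanical", "b": "Electrical", "depth_mm": 5},
    {"id": 4, "a": "Plumbing", "b": "Structural", "depth_mm": 12},
]
for row in triage(clashes):
    print(row)
```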

Construction sequencing benefits from LLM-assisted 4D simulation scripting. Describe construction phasing logic and request scripts or data for tools such as Navisworks TimeLiner or Synchro that implement the sequencing rules. The resulting simulations demonstrate construction sequences for client presentations and contractor coordination. Quantity takeoff timing can also be automated, aligning material procurement with construction schedules. These applications support project planning and stakeholder communication, improving coordination and reducing field conflicts.

Facility management preparation automates COBie data population and validation. Generate scripts that extract equipment information from BIM models and format it for FM system import. Validate data completeness before project closeout, identifying missing specifications, maintenance requirements, or warranty information. Custom reports translate BIM data into facility management formats for a smooth operational handover. This automation shortens closeout and improves the quality of delivered documentation supporting long-term building operations.
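The completeness check is a pass over component rows. The sketch assumes rows exported from a COBie Component worksheet as dictionaries; the column names are modelled on COBie's Component sheet, but the choice of which ones are required here is a hypothetical subset, since the real required set comes from the project's information requirements.

```python
# Hypothetical subset of COBie Component columns treated as required for this handover
REQUIRED = ["Name", "TypeName", "Space", "SerialNumber", "InstallationDate", "WarrantyStartDate"]

def missing_fields(components: list[dict]) -> dict[str, list[str]]:
    """Map each component (by Name, else row index) to the required fields that are absent or blank."""
    report = {}
    for i, row in enumerate(components):
        gaps = [f for f in REQUIRED if row.get(f) is None or not str(row[f]).strip()]
        if gaps:
            report[row.get("Name") or f"<row {i}>"] = gaps
    return report

components = [
    {"Name": "AHU-01", "TypeName": "Air Handling Unit", "Space": "L1-Plant",
     "SerialNumber": "SN-4471", "InstallationDate": "2024-05-02", "WarrantyStartDate": "2024-06-01"},
    {"Name": "FCU-12", "TypeName": "Fan Coil Unit", "Space": "L2-204",
     "SerialNumber": "", "InstallationDate": "2024-05-20"},
]
print(missing_fields(components))  # {'FCU-12': ['SerialNumber', 'WarrantyStartDate']}
```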

Security and data governance considerations

Data security is a critical concern when using cloud-based LLMs with proprietary project information. Consumer versions of platforms like ChatGPT may use submitted data for model training unless users opt out, creating intellectual property and confidentiality risks. Review the terms of service for data retention, usage rights, and training policies. Enterprise accounts often provide stronger privacy protections, such as excluding organizational data from training. Confirm compliance with client confidentiality agreements and organizational security policies before implementation.

Data sanitization protocols protect sensitive information while still enabling LLM assistance. Remove client names, project locations, and proprietary specifications from prompts. Use generic placeholders like "[CLIENT]" or "[PROJECT]" that maintain context without exposing confidential details. Abstract specific dimensions and parameters to representative values that preserve logical relationships without revealing the actual design. Generate scripts and documentation templates from generic examples, then manually customize them with actual project data.
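The placeholder substitution described above can be automated so that it is applied consistently to every prompt. This is a minimal sketch under stated assumptions: the `replacements` mapping is maintained by hand per project, and matching uses word boundaries, so each term should start and end with a letter or digit. It cannot catch terms that were never added to the mapping.

```python
import re


def sanitize(prompt: str, replacements: dict) -> str:
    """Replace sensitive terms with generic placeholders before sending a prompt.

    `replacements` maps sensitive strings (client names, locations, ...) to
    placeholders such as "[CLIENT]". Matching is case-insensitive, and longer
    terms are replaced first so a longer name is not partially replaced by a
    rule for a shorter one.
    """
    for term in sorted(replacements, key=len, reverse=True):
        placeholder = replacements[term]
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        # A function replacement avoids re's backslash handling in the placeholder.
        prompt = pattern.sub(lambda _match: placeholder, prompt)
    return prompt
```

For example, `sanitize("Create a sheet index for the Acme Corp tower in Riverside.", {"Acme Corp": "[CLIENT]", "Riverside": "[LOCATION]"})` returns `"Create a sheet index for the [CLIENT] tower in [LOCATION]."` (the client and place names are invented for illustration).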

Local LLM deployment eliminates cloud transmission concerns for organizations with strict security requirements. Open-weight models such as Llama or Mistral can run on organizational infrastructure, preventing external data exposure. There are performance and capability trade-offs, as local models typically lag behind leading commercial cloud services. Local GPU infrastructure and model management add cost but provide complete control over data. Weigh security requirements against capability needs to determine the appropriate deployment approach.
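To show what local deployment involves in practice, the sketch below sends a prompt to a model served on the same machine. It assumes an Ollama server running on its default port (11434) with a Mistral model already pulled. Other local runtimes expose different endpoints, so treat the URL and model name as placeholders.

```python
import json
import urllib.request

# Assumption: an Ollama server on its default local port. Other runtimes
# (llama.cpp server, vLLM, ...) use different endpoints and payloads.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a POST request to a locally hosted model; the prompt never leaves the machine."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (requires the server to be running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Because only the standard library is used, the same helper can sit inside an existing internal tool without adding dependencies. Swapping in a different local runtime means changing the URL and payload shape.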

Access controls and usage policies govern organizational LLM adoption. Define approved platforms and use cases to prevent ad-hoc tool selection that creates security gaps. Establish guidelines for appropriate data sharing and prohibited content. Train staff on security implications and proper usage. Monitor usage through approved platform accounts to maintain oversight without sacrificing productivity. Balance security with innovation, avoiding overly restrictive policies that prevent teams from realizing value.

Compliance with regulatory requirements, including GDPR, industry-specific data protection standards, and client contractual obligations, requires careful evaluation. Document LLM usage in quality management systems, covering tool validation and output verification. Maintain audit trails showing human review of AI-generated content for regulated deliverables. Consult legal counsel about liability implications, particularly for structural calculations, code compliance interpretations, or safety-critical applications. Proactive compliance prevents downstream legal or contractual complications.
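One lightweight way to keep such an audit trail is to log a hash of each reviewed output together with the reviewer's decision. The record fields and the JSON Lines log format below are hypothetical choices, not a requirement of any standard. A quality management system may prescribe its own format.

```python
import hashlib
import json
from datetime import datetime, timezone


def review_record(content: str, tool: str, reviewer: str,
                  approved: bool, notes: str = "") -> dict:
    """Create an audit entry tying a reviewer's decision to the exact AI-generated content.

    The SHA-256 digest lets an auditor confirm later that the archived file
    is the one that was actually reviewed.
    """
    return {
        "sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
        "tool": tool,
        "reviewer": reviewer,
        "approved": approved,
        "notes": notes,
        "reviewed_at": datetime.now(timezone.utc).isoformat(),
    }


def append_to_log(record: dict, path: str) -> None:
    """Append one JSON record per line; earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
```

Hashing the content rather than storing it keeps the log small and avoids copying confidential material into yet another file. The reviewed script or document itself stays in the project archive.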

Future trends and emerging capabilities

LLM technology continues to evolve rapidly, and several new capabilities are emerging. Multimodal models that combine understanding of text, images, and 3D geometry enable visual input. A user could upload a hand sketch with the request "convert this sketch to a parametric Revit family" and receive a model generated from the rough drawing, or point to a drawing element and ask "what are the dimensions of this wall" to get a direct answer without manual measurement. These visual interfaces further lower technical barriers to automation.

Cloud-based CAD execution integrated with LLMs reduces the need for local software. Browser-based platforms can execute AI-generated scripts in cloud environments accessible from any device. A request such as "create a floor plan meeting these requirements" could return completed drawings without a local CAD installation. Pricing shifts from software licensing toward consumption-based cloud billing, reducing upfront costs and enabling flexible scaling. This accessibility particularly benefits smaller firms and distributed teams.

Specialized CAD LLMs trained on engineering datasets improve domain-specific accuracy. Models fine-tuned on CAD documentation, API references, and engineering standards generate more reliable initial outputs that need less refinement. Vendor-specific models trained on platform documentation produce code that targets current API versions with accurate method calls. Expect industry-specific LLMs to emerge for architecture, civil engineering, MEP, and other specialties, improving first-attempt success rates.

AI copilots embed LLM assistance directly within CAD interfaces, enabling real-time collaboration. Conversational panels within Revit or AutoCAD let users request help without switching applications. Voice control allows hands-free operation during modeling, with commands stated naturally rather than memorized as syntax. The AI can also propose optimizations during design, such as "consider rotating the building 15° for improved solar orientation," providing proactive guidance. This integration makes AI assistance available throughout the workflow rather than requiring a deliberate switch to a separate tool.

Agent-based systems coordinate multiple specialized LLMs to handle complex multi-step workflows. A request such as "analyze these drawings, identify errors, generate correction scripts, and produce a validation report" triggers an orchestrated process across analysis, scripting, and documentation agents. Each agent handles its own domain while a coordinator keeps the overall workflow coherent. This architecture addresses automation scenarios beyond the capabilities of a single model, enabling end-to-end process automation.
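The coordinator pattern can be sketched as a pipeline in which each agent reads a shared context and adds its results. This is a simplified, sequential assumption of how such a system might be wired: the three agents below are hypothetical stubs standing in for separate LLM calls, and real orchestration frameworks add retries, branching, and tool access.

```python
from typing import Callable, Dict, List

# An "agent" here is any callable that reads the shared context and returns
# new entries for it. In a real system each would wrap its own LLM prompt.
Agent = Callable[[Dict], Dict]


def analysis_agent(ctx: Dict) -> Dict:
    # Hypothetical check standing in for drawing analysis: flag untitled sheets.
    errors = [sheet["number"] for sheet in ctx["sheets"] if not sheet.get("title")]
    return {"errors": errors}


def scripting_agent(ctx: Dict) -> Dict:
    # Stub: a real agent would ask an LLM for a correction script per error.
    return {"fixes": [f"set title for sheet {number}" for number in ctx["errors"]]}


def documentation_agent(ctx: Dict) -> Dict:
    # Stub: summarizes what the earlier agents produced.
    return {"report": f"{len(ctx['errors'])} error(s) found, "
                      f"{len(ctx['fixes'])} fix(es) proposed"}


def coordinate(pipeline: List[Agent], ctx: Dict) -> Dict:
    """Run agents in order, merging each agent's output into a new shared context."""
    for agent in pipeline:
        ctx = {**ctx, **agent(ctx)}
    return ctx
```

Keeping agents as plain functions over a shared dictionary makes each step testable in isolation, and the coordinator stays unaware of what any individual agent does.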

Conclusion and implementation roadmap

LLMs are an accessible, high-value automation technology that delivers immediate productivity gains for CAD professionals. The conversational interface removes programming barriers, enabling designers and engineers to automate repetitive tasks without specialized development skills. Time savings of 40-85% across script generation, documentation, and analysis tasks justify modest subscription costs, with positive ROI achievable within weeks. Organizations that implement systematic LLM workflows gain competitive advantages through improved efficiency, higher quality, and expanded capability without proportional staffing increases.

Successful implementation requires balancing experimentation with appropriate validation and governance. Start with clearly defined repetitive tasks that offer obvious automation potential. Develop prompt templates and validation procedures that ensure reliable outputs before production deployment. Train teams on effective prompting techniques and appropriate use cases to maximize value. Establish security protocols that protect proprietary information while enabling innovation. Measure results, quantifying time savings and quality improvements to justify continued investment and expansion.
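A prompt template library can start as simply as a dictionary of parameterized strings. The template names, wording, and `render` helper below are hypothetical examples. The useful property is that `string.Template.substitute` raises `KeyError` when a required value is missing, so incomplete prompts fail before they are sent.

```python
from string import Template

# A small, hypothetical prompt library. Real templates would live in version
# control alongside notes on which model and settings produced reliable output.
PROMPT_LIBRARY = {
    "rename_sheets": Template(
        "Write a Dynamo Python script for Revit $revit_version that renames all "
        "sheets using the '$parameter' parameter as a prefix. "
        "Wrap model changes in a transaction and comment every step."
    ),
    "schedule_export": Template(
        "Write a Dynamo Python script that exports the '$schedule' schedule to CSV at $path."
    ),
}


def render(name: str, **values: str) -> str:
    """Fill a stored template; Template.substitute raises KeyError if a value is missing."""
    return PROMPT_LIBRARY[name].substitute(**values)
```

For example, `render("rename_sheets", revit_version="2024", parameter="Discipline")` produces a complete prompt, while `render("schedule_export", schedule="Doors")` fails immediately because `path` was not supplied.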

ENGINYRING integrates LLM-assisted workflows with scan-to-BIM services, combining automated point cloud processing with intelligent post-processing and documentation generation. Our approach uses AI for script generation, quality validation, and deliverable preparation while maintaining professional oversight to ensure accuracy and completeness. This hybrid methodology delivers comprehensive as-built BIM documentation efficiently, meeting project requirements and quality standards. The surveyor-neutral approach works with any scanning provider, delivering consistent results regardless of data source.

Begin LLM implementation today by identifying three repetitive tasks that each consume more than two hours per week. Experiment with ChatGPT or Claude to generate initial scripts and documentation templates. Test outputs thoroughly and establish validation workflows before production use. Document successful prompts to build an organizational template library. Track time savings to quantify ROI and justify expanded adoption. The maturity of the technology and its low investment barrier make LLM integration a strategic imperative for forward-thinking AEC organizations.
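Tracking ROI comes down to simple arithmetic on the hours you log. The sketch below assumes you record weekly hours per task before adoption and the fraction saved afterwards. The `Task` fields and the payback formula (one-off setup cost divided by net weekly savings) are illustrative assumptions rather than a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hours_per_week: float  # measured time spent before automation
    savings: float         # fraction of that time saved after adoption (0 to 1)


def weekly_hours_saved(tasks) -> float:
    """Total hours saved per week across all tracked tasks."""
    return sum(t.hours_per_week * t.savings for t in tasks)


def payback_weeks(tasks, hourly_rate: float, weekly_tool_cost: float,
                  setup_cost: float) -> float:
    """Weeks until cumulative net savings cover the one-off setup cost.

    Returns infinity if the tool costs more per week than the time it saves.
    """
    net_weekly = weekly_hours_saved(tasks) * hourly_rate - weekly_tool_cost
    if net_weekly <= 0:
        return float("inf")
    return setup_cost / net_weekly
```

With three hypothetical tasks of 2.5 hours per week each, saving 50%, 25%, and 75% respectively, an hourly rate of 60, a weekly tool cost of 5, and a setup cost of 440 (all in the same currency), `payback_weeks` returns 2.0.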

Contact ENGINYRING to discuss how AI-enhanced BIM workflows can support your project delivery objectives. Our team can demonstrate practical LLM applications for scan-to-BIM post-processing, quality validation, and documentation automation. Discover how combining professional modeling expertise with intelligent automation delivers superior results efficiently. Visit the ENGINYRING scan-to-BIM services page to learn about comprehensive as-built documentation solutions that use the latest automation technologies while maintaining uncompromising quality standards.

Source & Attribution

This article is based on original data belonging to the ENGINYRING.COM blog. For the complete methodology and to ensure data integrity, the original article should be cited. The canonical source is available at: Beyond ChatGPT: Using LLMs to Accelerate Your CAD Work.